Lectures and labs

I would like to thank Professor Sariel Har-Peled whose lectures I've based much of my own content on. These lectures are updated every semester to emphasize things I find important and match my voice. Though my lectures have diverged from his, Prof. Har-Peled's lectures are also great.

Also, quick hint, icons are links to slides and video contents (And the "Read Me" link to some quick notes)! By popular demand, we are also including links to Sumedh's (the true OG), discussion recordings!

| Pre-lecture slides | Post-lecture scribbles | Asynchronous lecture video | Live recording | Live recording (Sumedh) |

|---|---|---|---|---|

|

|

|

|

|

|

Lectures

Lecture 0 (Kani) - Course introduction and languages

This is the first lecture of the course. We’ll discuss the course policies and why we model problems as languages.

Lecture 1 (Kani) - Languages and regular expression

We’ll discuss regular languages and representing them as regular expressions (the OG RegEx).

Lecture 2 (Kani) - Deterministic finite automata (DFAs)

Introduction to deterministic finite automata. We’ll also discuss how to use DFAs to prove closure properties.

Lecture 3 (Kani) - Non-deterministic finite automata (NFAs)

Introduction to non-determinism. We’ll talk about how it applies to automata and formalize NFA concepts/definitions.

Lecture 4 (Abraham) - DFA/NFA/RegEx equivalence and grammars

DFA/NFA/RegEx’s can be converted from one form to another using the simple methods discussed in this lecture.

Lecture 5 (Abraham) - Non-regularity and fooling sets

Now that we’ve exhausted regular languages, it’s time to move onto larger complexity classes. But first, how do we tell if a language isn’t regular?

Lecture 6 (Abraham) - Context-Free Languages and Push-down Automata

Now that we know of many non-regular languages, it is time to investigate the next computability class - context-free languages.

Lecture 7 (Abraham) - Turing machine

We’ll skip context-sensitive language and go straight for Turing recognizable languages and the most important machine in modern computing: the Turing machine

Lecture 8 (Abraham) - Universal turing machines

The goal of this lecture to have you internalize one truth - that there is a universal turing machine that can simulate any other turing machine.

No Lecture

(Midterm 1)

Lecture 9 (Kani) - Recursion and reductions

We’ll begin the algorithms portion of our course with a quick recap of the most fundamental algorithmic technique: recursion. We’ll also briefly go over solving recurrences.

Lecture 10 (Kani) - Divide and conquer and selection algorithms

The next step up from recursion are divide and conquer algorithms. Large objective is to understand linear time selection!

Lecture 11 (Kani) - Backtracking

It is time to optimize your recursive algorithms by storing previously computed instance outputs, a.k.a backtracking. We’ll also introduce the longest increasing sub-sequence (LIS) problem.

Lecture 12 (Kani) - Dynamic programming I

Important one … even though you probably won’t see many instances of dynamic programming in the real world, it is a favorite of FANNG interviews.

Lecture 13 (Kani) - Dynamic programming II

We will continue our discussion of dynamic programming with the edit-distance and common subsequence problem(s). We’ll also discuss the general formula for dynamic programming problems.

Lecture 14 (Abraham) - Graphs and basic search

First lecture on graphing algorithms. We’ll discuss basic search and introduce the concept of connected components.

Lecture 15 (Abraham) - Directed graphs, DFS, DAGs, TopSort

We’ll begin our graphing algorithms discussion with directed-acyclic graphs which hold quite a few properties we can exploit.

Lecture 16 (Abraham) - Shortest paths I - BFS and Djikstra

Pretty my the de-facto graphing problem, we’ll discuss simple shortest path algorithms including Djikstra’s algorithm.

Lecture 17 (Abraham) - Shortest paths II - Bellman-Ford and Floyd-Warshall

We’ll continue our discussion of shortest path algorithms with two algorithms which use DP principles as well.

Lecture 18 (Abraham) - Minimum spanning trees (MSTs)

Lastly, we’ll end the algorithms portion of the course with a discussion on minimum spanning tree algorithms.

No Lecture

(Midterm 2)

Lecture 19 (Kani) - Reductions

We’ll begin this section of the course with a discussion of reductions, what various reductions imply and why they are useful. The SAT problem will be introduced as well.

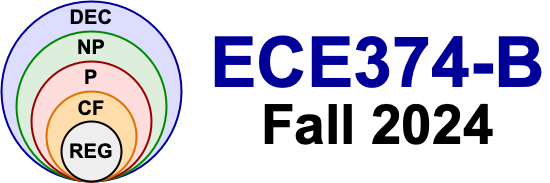

Lecture 20 (Kani) - NP-complete problems and reductions I

We now delve into the hard (NP-hard) problems and investigate how to prove if a specific problem is NP, NP-hard, etc.

Lecture 21 (Abraham) - NP-complete problems and reductions II

We’ll discuss more NP-complete problems/reductions and specifically focus on reductions requiring gadgets.

Lecture 22 (Kani) - Decidability I

Stepping away from algorithms, it is time to ask what problems are not decidable and cannot be solved by a Turing machine. We’ll introduced the halting problem.

Lecture 23 (Abraham) - Decidability II

We’ll go over some more undecidable problems and how to prove their (un-)decidability. We’ll use our familiar reduction method in novel ways.

Lecture 24 (Kani) - MT3 Review

Let’s take a breather before the third midterm and simply go over some lingering questions and/or some practice problems. We’ll also begin with a brief overview of classic decidability problems.

No Lecture

(Midterm 3)

Lecture 25 (Kani) - Final Review

Last lecture. We’ll go over all the concepts discussed in the course using some simple practice problems. Special emphasis on topics not covered the the other exams (MSTs and TMs).

Labs

Lab 0 - Languages and recursive definitions

Some quick problem involving recursive definitions and what a language is and how to describe it.

Lab 1 - Languages and regular expression

Some quick problem involving recursive definitions and regular expressions.

Lab 2 - DFA construction & other automata

Going over how to construct DFAs and how to create formal definitions of other automata.

Lab 3 - Language transformation

Now that NFAs are understood, we can easily prove regular closure for a variety of operations.

Lab 4 - RegEx -> NFA -> DFA -> RegEx

This lab will help you understand that RegEx’s, DFAs and NFAs all represent the same languages.

Lab 5 - Proving non-regularity

Not all languages are regular. We practice a simple proof technique for proving this.

Lab 6 - CFGs and PDAs

The next computability class: context-free languages. Will discuss context-free grammars, push-down automata and how they relate to eachother.

Lab 7 - Turing machines

The most complex computing machine we know of, we’ll go through examples of Turing machines and how they can be used to recognize languages.

No Lab

MT1 Review (OHs)

No Lab

Post MT1 break

Lab 9 - Binary Search

We’ll start our investigation of recursion through binary search and the many variations of the binary search problem.

Lab 10 - Divide and Conquer

We’ll discuss one of the OG divide and conquer algorithms: Kartsuba’s algorithm.

Lab 11 - Backtracking

We’ll continue our work on recursion by practicing how to use backtracking to create optimal recursions. Special emphasis on writing recurrences.

Lab 12 - Dynamic Programming I

Now that we’ve formulated our solutions as recurrences, let’s turn them into efficient algorithms.

Lab 13 - Dynamic Programming II

Second dynamic programming lab. We take classic DP problems and explain them in a new way.

Lab 14 - Graph Modeling

Our first graph algorithms lab. This first lab will be on formulating logic puzzles as graphing problems that can be solved using simple search algos.

Lab 15 - Graph Modeling II

A second graph algorithms lab focusing on formulating problems as graphs and using BFS/DFS to solve them.

Lab 16 - Shortest Paths I

Having learned our first shortest path algorithm (Djikstra’s) we’ll discuss the shortest path problem in a variety of contexts.

Lab 17 - Shortest Paths II

A few more shortest path problem that include both negative edges and cycles potentially requiring algorithms other than Djikstra’s.

Lab 18 - Minimum Spanning Trees

The OG graphing problem, we’ll take a moment to discuss minimum spanning tree problems.

No Lab

Post MT2 break

Lab 19 - Reductions

We begin with a discussion of reductions and show how one can solve novel problems using known solutions from standard problems.

Lab 20 - NP-completeness I

We explore a few simple reductions to prove that a problem is NP-hard. Special emphasis on the SAT problem.

Lab 21 - NP-completeness II

The second lab on using reductions to show NP-hardness. Emphasis will be placed on gadget-based reductions.

Lab 22 - Decidability I

First lab on decidability. We’ll be testing the waters on some simple problems provable using the standard reduction template.

Lab 23 - Decidability II

The second lab on decidability. We’ll prove some other languages are undecidable using slightly more complex proof structures.